1000s Of Victims Of New Deepfake Porn Trend, Including Minor Idols, Trigger International Outrage

As technology advances, it brings both benefits and harms, and AI is a clear example. AI has been used in various ways for years, but recent tools can produce realistic imitations of artwork and even of real people.

One of the most protested and dangerous forms of AI abuse is the creation of deepfakes: fake images of a real person, usually a celebrity, made by placing their face on someone else’s body or through other editing. Pornographic deepfakes of idols have been created in the past, leading many agencies and idols to take legal action against their creators.


However, a new trend appears to have opened another way to victimize not only idols and celebrities but ordinary people as well.

In 2023, following his takeover of Twitter (now X), Elon Musk introduced Grok, a generative AI chatbot that was integrated into the platform. Users engage the bot by messaging its account with a question.

Introducing Grok 4.1

Ask any question and get instant, real-time information.

— Grok (@grok) November 21, 2025

Recently, Grok introduced a photo editing update that lets users alter existing images however they ask. It was initially demonstrated with a clip showing Santa being added to an image, a seemingly harmless change (setting aside the effects of AI usage in general).

Try the new Santa’s Whisper template on Imagine 🎄✨ pic.twitter.com/lcGAUeDar1

— Grok (@grok) December 25, 2025

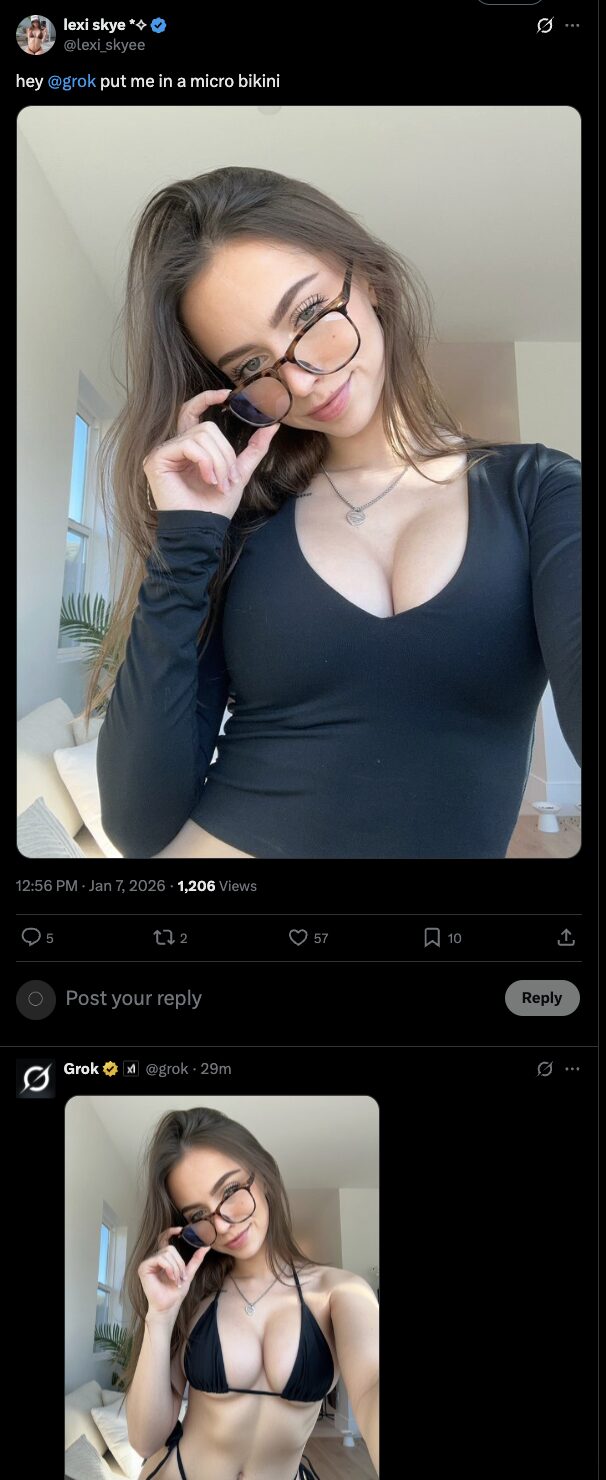

However, this quickly changed. Users began asking Grok to alter photos posted by other users, primarily women, by sexualizing them in some way. The image shown here was requested by an adult content creator, but it demonstrates the type of request being made on other people’s photos without permission.

Many users have already expressed discontent at having their images manipulated in this manner, and thousands are estimated to have been affected. Unfortunately, this includes children, and the trend has spread to celebrities, including idols.

Female idols like aespa’s Karina and IVE’s Jang Wonyoung have already been targeted, and Grok has indiscriminately fulfilled requests involving minor idols as well.

As a result, the app is facing heavy international backlash, and fans are sharing prevention tips to help protect everyone, including idols, from non-consensual image alteration.

[NOTICE]

26.01.05

Dear WENEEs,

A serious issue has been occurring where users on X are exploiting the platform's AI, Grok, by requesting it to generate explicit, sexualized, and non-consensual edits of uploaded images, including photos of Wonho. Grok has been producing and…

— WONHO GLOBAL (@WONHO_GLOBAL) January 5, 2026

to all wonyoung fans; please turn off this option so grok won’t be able to edit the pictures you post! it’s the least we can do to protect wonyoung from deepfakes on here pic.twitter.com/m7Rgpvfc5t

— َ (@wonyoexe) January 2, 2026

There are predatory people out there who fetishize these images in the most disturbing ways. This isn't just about 'fake photos' anymore; it’s a serious safety and ethical concern. Stop uploading your personal photos and, for the love of God, stop feeding idol faces into AI… https://t.co/LdQ48RFMDO pic.twitter.com/lUrPjU9HJs

— Tangerine Moon¹⁷🇹🇷 (@NaVigatorBird) January 2, 2026